Professor Layton Und Das Geheimnisvolle Dorf Nds Rapidshare Library

Abstract

Too much of the data on the Web appears in unstructured, presentation-centric formatting that is not suited to structured searching and retrieval. Upconversion to a more data-centric information storage format opens up many new uses of the data. The starting point of our work is a collection of HTML documents containing video game reviews. Our goal is to describe a target XML format that supports certain elements and attributes containing information that we consider valuable. Furthermore, the conversion process itself should be carried out automatically by means of an XProc pipeline.

We conclude our paper with a demonstration of typical benefits of the highly structured data that results from our conversions.

Introduction

Vast collections of information are stored in HTML files distributed over millions of Web pages across the Internet. Quite valuable data can often be found among them; however, HTML does not offer a large pool of semantically motivated elements or attributes for annotating arbitrary data, since the language was originally created for hypertexts. Although CSS microformats may be used to add semantic value to structuring elements (e.g. div and span), most information is buried underneath a 'tag soup' of td, p or div elements that allow no inference about their content. In contrast, information can be highly structured by means of very specialized XML markup using a document grammar (DTD, XSD or RELAX NG) that allows for easy retrieval of very specific information.

A real-world collection of (sometimes even invalid) HTML 4.01 Web pages storing video game reviews is a good candidate for demonstrating how value can be added through better markup. Our goal is to transform these pages into fully structured and valid XML instance documents that allow different queries about the information. Since we are confronted with several hundred reviews, an automated conversion process is valuable. As an additional goal, we would like to stay in the realm of XML techniques; for example, we would like to avoid using non-XML-aware software such as general-purpose scripting languages (e.g. Perl, Python).

Information content

Video games are a part of today's culture and are available in a huge variety in terms of supported game system, genre and, of course, quality. Finding a game that fits both one's hardware requirements and favoured genre is a relatively easy task, but basing the decision to buy a specific game only on the text written on the back of its case is daring at least. Impartial (more or less) reviews of video games may help to clarify whether the money is well spent in the long run by providing rating systems for features such as graphics, sound, atmosphere or overall score (usually higher scores are better). The team of the German Mag'64 Web site has tested video games for over eight years, gathering over 1500 reviews, each consisting of a single HTML Web page. Each document contains information about the game being tested, the review, including a general judgement, and images and screenshots.

This information is quite valuable since the provided items include general ones such as the title, system, or publisher, and also more specific ones such as number of players, genre, age rating and difficulty. The review consists of running text, while the final verdict and pros and cons are summarized in a tabular view. The data we have to deal with consists generally of two types of reviews, which we call 'Type A' and 'Type B'. Type A was used during the years 2001 through 2004, while Type B was introduced in the autumn of 2004.

Technical analysis

From a technical point of view the data is stored in HTML Web pages.

Because HTML's original task is to structure hypertexts, it lacks specific elements and attributes for annotating the information we are interested in. Furthermore, the markup of our test data is very focussed on presentation, that is, general HTML elements such as div, p, td are used for physically structuring the information according to a given layout. While the two review types, A and B, do not differ regarding their information content, there are differences in the markup techniques used.

Type A

The Type A review was originally used as part of an HTML frameset. While one frame contained a menu for navigating through the whole service, the second frame stored a single review in the form of an HTML page. This page lacks an HTML doctype declaration, and typical copy-and-paste errors can be found, including end tags without preceding start tags, wrong attributes, etc. The img element for embedded graphics lacks the required alt attribute. Furthermore, no information about the character encoding is given, which leads to encoding errors since German umlauts and other special characters were used. In an excerpt of a Type A review, the visible text reads: Mag64 SYSTEM: GCN - PAL ENTWICKLER: Ubi Soft GENRE: Jump'n Run SPIELER: 1-4 Spieler (ENTWICKLER is the developer, SPIELER the number of players). The markup we have to deal with is very presentation-focussed: semantic markup such as h1 or h2 that could be used for structuring the text is not used at all.

The title of the game can only be found in the running text or in the graphic image referenced by the img element, and sometimes in external cheats or tricks documents that are linked from the review page (indicated by the term 'CHEATS: JA', i.e. 'cheats: yes', on the review page). All useful information is buried deep inside HTML's table elements, and the page lacks any meta elements for storing additional information. Spacing between different parts of the text was introduced by using HTML's &nbsp; entity, while the whole markup is layout oriented, using font, i and u elements. Sometimes font elements with identical formatting options are nested inside each other, resulting in a tag soup. Emphasis is expressed solely by selecting 'size 3' fonts. The running text of the review is distributed among different table elements, establishing a print-like layout.

Each review begins with two blocks containing meta-information, such as system, genre, number of players, etc.

Type B

The Type B reviews were established in the autumn of 2004, coinciding with the release of the Nintendo DS® handheld console.

Since this video game console introduced some features that were unknown before (e.g. split-screen and the stylus input device), a new HTML template for reviewing video games was adopted.

As a new meta-information item, an age rating was added, and the running text was subdivided by headings. Most of the HTML pages contain a doctype declaration (incorrect for HTML 4.01), a reference to an externally declared CSS stylesheet and information about the character encoding (ISO-8859-1, although the specified encoding is sometimes not correct, since some documents are encoded using the Windows-1252 charset or even UTF-8). In addition to the external CSS file, local formatting using attributes such as marginwidth, bg-color or border can still be found. In general, the HTML pages are not valid according to the W3C validation service. The pages thus mix several different formatting options.

A RELAX NG schema (in combination with the XML schema datatype library) would have been another option; however, the broader support for XML schema supplied by the XSLT processor used during the conversion process tipped the scales for us. Each game can be identified by a unique xml:id attribute. Further optional attributes correspond to genre and subgenre, each supporting an enumerated list of possible values, which should help to avoid typical errors such as typos. Children of the game element are the title and platforms elements, the latter consisting of at least one handheldGameConsole or videoGameConsole, which makes it possible to combine reviews of the same video game released on multiple platforms. Both elements are derived by extension of the globally declared complexType consoleType, sharing common information present in stationary and handheld game consoles. The release element stores information about the date of release (using an attribute of type xs:date), the different languages and the price. Children of the languages element are spoken, text and handbook elements, describing the parts of the game that have been translated.

The price element has a currency attribute that uses an enumerated list of possible values. An optional image element can be used to represent box pictures or screenshots of the game reviewed.

As mentioned above, the handheldGameConsole and videoGameConsole elements are derived from the complexType consoleType by extension. Although the additional elements techSpecs and saving use the same names, their content models are different with respect to the video game console, since, for example, the requirements for storing save games are different between handheld and stationary consoles.

Only the videoGameConsole element allows for the compatibleInputDevices child element. Most of these elements use enumerated lists to eliminate possible typos and to ease the acquisition of new reviews. The main part of the review is stored underneath the review element that consists of the mainText and conclusion elements and further optional screenshots and that has a date attribute and an author attribute group. The running text is subdivided into optional headers and paragraphs, allowing a fine grained division of text parts and representing both review types.

The conclusion element is used to store both further text (e.g. in the form of a final verdict similar to the Type B reviews) and the tabular lists of pros and cons, followed by the final score element. Scoring can be expressed either via numeric values (using the percent child element with its attributes graphics, sound (optional), multiplayer (optional) and overall) or through text, since both variants can be found in our sample data. This grammar can not only be used to store the information coded in both review types but is also highly flexible for future extensions.

Possible future extensions of the schema may include XSD 1.1 assertions, for example, to ensure that multiplayer scoring information is only allowed when the maximum number of players is greater than 1.

XSLT 2.0 benefits

In his paper 'Up-conversion using XSLT 2.0' Michael Kay points out the great advances XSLT made when shifting to XSLT 2.0, and he provides a real-world example that makes heavy use of the new features. The key features that benefit upconversion are, in short, schema-awareness, support for regular expression processing, better manipulation of strings, and advanced grouping possibilities. Tasks that formerly were often solved by using a general-purpose scripting language like Perl or Python with XML modules loaded can therefore be done equally well or better with XSLT 2.0. Our upconversion of the reviews mostly makes use of regular expression processing and string manipulation. The documents are preprocessed into well-formed XML using HTML Tidy. For the upconversion, both functions and named templates are used widely.

One part of the extensive main template illustrates the massive clean-up the stylesheet performs. It uses a variable to hold the string with information about the genre of the reviewed game. This string is checked for both Type A and Type B data equally, but it is extracted differently depending on the structure.

This variable is then checked against regular expressions to assign the respective value from the defined enumerated list. The assignment of genres and a subgenre (for example Action-Adventure, Sport, Action, and the subgenre First Person Action) is implemented using case differentiation that takes advantage of the order of the test expressions. Because the data varies a lot, many case differentiations are used throughout the transformation. To find the title of some documents, information stored in external documents has to be taken into account. In such cases, a linked 'cheats' or 'tips' document is accessed to extract the game title that is hidden in the backlink to the review document. Throughout the transformation many more requirements are met in carrying out the upconversion.
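The order-dependent case differentiation can be illustrated outside the stylesheet. The following is a minimal sketch in Python rather than the authors' XSLT 2.0; the regular expressions, the rule table `GENRE_RULES` and the function `classify_genre` are hypothetical, and only the four enumerated values are taken from the text above.

```python
import re

# Ordered (pattern, genre, subgenre) rules. The first matching rule wins,
# so more specific patterns must be listed before more general ones.
# All patterns here are illustrative assumptions, not the paper's expressions.
GENRE_RULES = [
    (re.compile(r"action[\s-]*adventure", re.I), "Action-Adventure", None),
    (re.compile(r"sport", re.I), "Sport", None),
    (re.compile(r"(ego|first[\s-]*person)[\s-]*shooter", re.I),
     "Action", "First Person Action"),
    (re.compile(r"action", re.I), "Action", None),
]


def classify_genre(raw):
    """Return (genre, subgenre) for a raw genre string, or (None, None)."""
    # Collapse whitespace left over from the HTML layout.
    text = " ".join(raw.split())
    for pattern, genre, subgenre in GENRE_RULES:
        if pattern.search(text):
            return genre, subgenre
    return None, None
```

Because "Action - Adventure" also contains the word "Action", placing the general Action rule first would misclassify it; this is the sense in which the order of the test expressions matters.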

The examples above are simply illustrative of the process without going into complete detail.

Pipelining with XProc

XProc, a new standard for automating processes like ours through an XML pipeline, has been developed by a W3C working group. It reached the status of W3C Recommendation on 11 May 2010 after being advanced to Proposed Recommendation in March 2010. The specification had been downgraded from Candidate Recommendation to Working Draft again in January to solve some issues. It has reached a fairly stable level now, and a book on XProc by Norman Walsh is in progress.

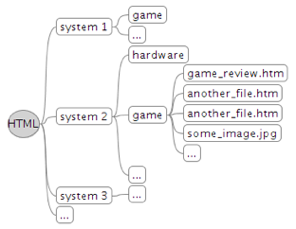

For our desired all-in-one XML solution, XProc is the first choice to handle the pipeline. The pipeline should recursively process the documents that are stored locally in the filesystem. There are documents other than game reviews (e.g. cheats and tricks), and we need some of them to extract the titles of games, but most of these documents are discarded. One problem here is that the filename tells us what is most likely not a review, but not what actually is one. The pipeline has to carry out the following tasks:

• Use HTML Tidy to transform the HTML input into well-formed XML
• Apply the XSLT script to the output of the former task using an XSLT 2.0 processor
• Validate the output files according to the XML schema
• Separate valid from invalid documents
• Provide a log of valid documents

XProc suits these needs well and, as an XML language, fits seamlessly into an XML toolchain. For processing we use XML Calabash version 0.9.21.

As another option, Calumet 1.0.11 was taken into account, but since Calumet currently does not support XPath 2.0, we stick to XML Calabash. We prepared the documents so that the encoding of the files is either ISO-8859-1 or UTF-8 and the special characters are masked as numeric entities for the moment. Otherwise there would be encoding errors in the resulting XML documents. Since the pipeline takes HTML documents as input and processes all of them in sequential order, some preparatory steps are used to make the documents accessible inside the XML pipeline.
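The preparation of the input files is not shown in detail. One way to mask special characters as numeric entities can be sketched as follows; this is an assumption about how such a preparation might look, written in Python rather than with the authors' tools, and the function names `decode_html_bytes` and `mask_non_ascii` are hypothetical.

```python
def decode_html_bytes(data):
    """Decode bytes as UTF-8 if possible, otherwise as Windows-1252."""
    try:
        return data.decode("utf-8")
    except UnicodeDecodeError:
        # Windows-1252 agrees with ISO-8859-1 for all printable characters;
        # errors="replace" guards the few byte values cp1252 leaves undefined.
        return data.decode("cp1252", errors="replace")


def mask_non_ascii(text):
    """Replace every non-ASCII character with a numeric character reference."""
    return text.encode("ascii", errors="xmlcharrefreplace").decode("ascii")
```

A short ISO-8859-1 byte sequence can occasionally also be valid UTF-8, so this fallback order is a heuristic rather than a reliable encoding detector.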

To advance deeper into the structure we use nested p:for-each loops; of course, the output port needs to be set to accept sequences. Next we list the subdirectories, consisting mainly of game folders. Now we loop over the game folders (not shown due to space restrictions) and prepare the files for accessibility. First we add the base URI to get the complete file path using p:make-absolute-uris. Then we add slashes using p:string-replace to conform to the file protocol. To make sure the file is accessible for the p:http-request step we rename the element c:file to c:request.

Furthermore, we need to add the proper attributes for the p:http-request step to work. Since there is no server involved and we do not want to work with binary data, we need to add the attribute override-content-type with the value text/html. Now we can process the HTML documents in sequence. We use a filter to exclude documents which are not reviews and will not help us to find game titles. These documents may be reader reviews that follow no certain structure, hardware reviews, or other texts. Files that may help us to find missing game titles contain these abbreviations: opt chea tipp herz guid pass. For the filtered documents, the second and main part of the pipeline is initiated.
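The part of the filter that recognizes title-helper documents can be sketched from the abbreviation list alone. The following Python fragment is an illustration, not the filter used in the pipeline; the function names and the example filenames in the usage note are hypothetical, and the criteria for rejecting reader or hardware reviews are not given in the text and are therefore not modelled.

```python
from pathlib import PurePosixPath

# Abbreviations listed in the text for files that may reveal a game title.
TITLE_HINT_MARKERS = ("opt", "chea", "tipp", "herz", "guid", "pass")


def is_title_helper(filename):
    """True if the file name contains one of the title-hint abbreviations."""
    stem = PurePosixPath(filename).stem.lower()
    return any(marker in stem for marker in TITLE_HINT_MARKERS)


def split_files(filenames):
    """Split file names into (review candidates, title-helper documents)."""
    helpers = [f for f in filenames if is_title_helper(f)]
    candidates = [f for f in filenames if not is_title_helper(f)]
    return candidates, helpers
```

For instance, `split_files(["rayman3.htm", "rayman3_cheats.htm"])` places the second name among the helpers. A plain substring test will also match unrelated names that happen to contain one of the abbreviations, which reflects the uncertainty about filenames noted earlier.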

If something goes wrong during the upconversion, we want to be able to check in which step it happened and what the reason may be, so the output of each main step is stored separately. We nest try-catch clauses to keep the pipeline running. The variable file holds the URI of each file.

It is available throughout the loop and serves not only to fetch each file but also to store each result in its designated folder. First, we convert the files that pass the filter with HTML Tidy via p:exec, which can take non-XML input and provides safety. We could use p:unescape-markup in conjunction with Tagsoup 1.2 or HTML Tidy as an alternative solution here, but as XML Calabash has so far only implemented Tagsoup for reading HTML, and the results from HTML Tidy and Tagsoup differ slightly, we stick to p:exec. Calumet supports both HTML Tidy and Tagsoup for this step, but as we are using XPath 2.0 we cannot use this option. We set source-is-xml to false and result-is-xml to true. By default, result lines are wrapped, and the output of this step is also wrapped to ensure well-formed XML documents on the output port.

We negate wrap-result-lines and unwrap the output of the step. (Note that the arguments for HTML Tidy need to be on a single line.)

The output of this step is saved to the folder 'Tidied' as 'filename.xml' and chained to the next step, p:xslt. As a precaution, this step along with the connected saving procedure is encapsulated in a try group. If any of this fails, we record the tidied file in the folder 'Transform-failed'. The p:xslt step takes three input ports, one for the stylesheet, one for the XML document and one for parameters. The file path needs to be provided to the stylesheet so that it can reach the documents that will be consulted for missing titles. The filename and system folder are processed inside the transformation as well.

If the transformation and the saving process can be executed successfully, the output of this step serves as input for p:validate-with-xml-schema. Depending on the output of this step, the documents are saved separately. Valid documents can be found in the 'Schema-Valid' folder and invalid ones in the 'Schema-Invalid' folder. (During the programming of the XSLT transformation, invalid documents give hints about expressions in need of improvement.) The last steps of the pipeline follow after the loops and take the result of the directory loop. Here we create an XML document which takes the c:result elements returned by the step directoryloop and lists them for an overview.
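The control flow of the per-file part of the pipeline (tidy, transform, validate, and route the result to a folder) can be summarized in a small sketch. It is written in Python instead of XProc purely for illustration; `process_review` and its callable parameters `tidy`, `transform` and `validate` are hypothetical stand-ins for the p:exec, p:xslt and p:validate-with-xml-schema steps, while the folder names are those given in the text.

```python
from pathlib import Path


def process_review(name, html_text, out_root, tidy, transform, validate):
    """Run one document through tidy -> transform -> validate.

    tidy and transform are callables (str -> str) that may raise on failure;
    validate is a callable (str -> bool). Returns the name of the folder in
    which the final result was stored.
    """
    out_root = Path(out_root)
    xml_name = Path(name).stem + ".xml"

    def save(folder, text):
        target = out_root / folder
        target.mkdir(parents=True, exist_ok=True)
        (target / xml_name).write_text(text, encoding="utf-8")
        return folder

    # Step 1: HTML -> well-formed XML, stored separately for later inspection.
    tidied = tidy(html_text)
    save("Tidied", tidied)

    # Step 2: upconversion; on failure keep the tidied input for debugging.
    try:
        transformed = transform(tidied)
    except Exception:
        return save("Transform-failed", tidied)

    # Step 3: route by validation result.
    folder = "Schema-Valid" if validate(transformed) else "Schema-Invalid"
    return save(folder, transformed)
```

Keeping every intermediate result on disk mirrors the motivation stated above: when a document fails, the folder it ends up in already indicates the failing step.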

This pipeline takes approximately half an hour to process the data, and the run time is relatively independent of CPU speed on an average current system. It results in 1573 schema-valid files.

The result of the upconversion process

In an instance coded in the target output format according to the XML schema, the critical information is marked up with the help of appropriate elements or attributes.

Conversions of a game (i.e., releases on different platforms) are supported as well, by separating the general information such as title and genre from the platform for which the review is written. The verdict contains the list of 'pro' and 'con' items and the score (depending on the input review type, subdivided into single figures for game graphics, sound, multiplayer and overall) in a highly structured form that allows easy access to relevant criteria. The example instance carries the following text content: Rayman3 Hoodlum Havoc, Ubi Soft, 60, PAL, GCN-GBA-Link, Gamecube Controller, GBA, the running text ('Bisher hat uns Ubi Soft ja (...)', 'Durch den Score werden (...)'), and the verdict items 'Unterhaltsames Gameplay' ('entertaining gameplay') and 'Ende wird zu schnell erreicht' ('the end is reached too quickly').

Benefits of highly structured data: searching for the game according to your flavour

The result instances of the automatic upconversion process contain highly structured information. All relevant and important data that was formerly hidden inside HTML's table element or as part of the running text can be accessed via XPath or XQuery expressions, allowing for easy retrieval of reviews of games of certain types or according to certain criteria such as genre, price, and score.

While the original structure of the Mag'64 Web site offered access to the reviews based on either the video game system or the name of the game, a full-text search engine was not implemented. We have developed some sample XQuery queries that allow for a different kind of retrieval of game reviews.

Alternative access to the reviews

The query genres.xq uses two parameters, genre and platform, to search for games of a certain genre on a specific platform by using a collection of all valid XML instance documents. As an example, the query can be run with the value 'Wii' for the platform parameter and the value 'Puzzle' for the genre parameter. Since this query was originally developed as an alternative access mechanism, the information returned is very sparse. However, in combination with (X)HTML output containing hyperlinks to the respective review page, it would be sufficient.
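The idea behind genres.xq can be illustrated as follows. This is a sketch in Python over in-memory XML strings, not the authors' XQuery. The element and attribute names game, genre, title, platforms, handheldGameConsole and videoGameConsole follow the schema description above; the `name` attribute carrying the platform and the absence of a namespace are assumptions.

```python
import xml.etree.ElementTree as ET

CONSOLE_TAGS = ("handheldGameConsole", "videoGameConsole")


def games_by_genre(documents, genre, platform):
    """Return sorted titles of games of a genre reviewed on a platform."""
    titles = []
    for xml_text in documents:
        game = ET.fromstring(xml_text)
        if game.get("genre") != genre:
            continue
        consoles = [c for tag in CONSOLE_TAGS
                    for c in game.findall(f"platforms/{tag}")]
        # 'name' as the platform identifier is a hypothetical attribute.
        if any(c.get("name") == platform for c in consoles):
            titles.append(game.findtext("title", default=""))
    return sorted(titles)
```

Like the original query, the sketch returns only sparse information (the titles); a real front end would add links to the review pages.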

A more elaborate example: a wish list

Kids love video games these days, and often they leave their parents behind when it comes to choosing the right game for a present. We will demonstrate the benefits of highly structured data in this example. Consider a seven-year-old child with a Nintendo DS® who wants to get a racing game for his system.

The parents might agree but formulate additional constraints: the game to be bought should have a score of at least 70% and should be appropriate for kids of his age. Furthermore, the difficulty should not be too high. For this query different parameters have to be taken into account: the platform, the genre, age rating, score, and difficulty. The shoppingList.xq query provides all these parameters.
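The combination of constraints can be sketched in the same illustrative way. The fragment below is Python, not the shoppingList.xq query itself. The path to the percent element and its overall attribute follow the schema description; the attributes `name`, `ageRating` and `difficulty` on the console element, the placement of review below the console element, and the values in `DIFFICULTY_ORDER` are assumptions made for this example.

```python
import xml.etree.ElementTree as ET

# Hypothetical enumeration, ordered from easiest to hardest.
DIFFICULTY_ORDER = ["easy", "medium", "hard"]


def shopping_list(documents, platform, genre, max_age, min_score,
                  max_difficulty):
    """Return (title, overall score) pairs matching all constraints."""
    limit = DIFFICULTY_ORDER.index(max_difficulty)
    hits = []
    for xml_text in documents:
        game = ET.fromstring(xml_text)
        if game.get("genre") != genre:
            continue
        for console in game.iterfind("platforms/*"):
            if console.get("name") != platform:
                continue
            percent = console.find("review/conclusion/score/percent")
            if percent is None:
                continue  # textual scores cannot be compared numerically
            score = int(percent.get("overall", "0"))
            age_ok = int(console.get("ageRating", "99")) <= max_age
            level = console.get("difficulty")
            level_ok = (level in DIFFICULTY_ORDER
                        and DIFFICULTY_ORDER.index(level) <= limit)
            if age_ok and level_ok and score >= min_score:
                hits.append((game.findtext("title", default=""), score))
    # Best-rated games first.
    return sorted(hits, key=lambda hit: -hit[1])
```

For the scenario above, a call such as `shopping_list(docs, "NDS", "Racing", 7, 70, "medium")` would express the parents' constraints, with `docs` being the collection of schema-valid instances.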

The query can be run using Saxon as the XQuery processor.

Conclusion

The results of our work are of several kinds. First, the newly introduced features such as regular expressions and string manipulation qualify XSLT 2.0 as a full-fledged conversion tool for transforming weakly structured data into a highly structured format. Second, if a transformation process has to be carried out multiple times and if other processing is involved, automation by using the XProc pipelining language is highly recommended. Both the XProc specification and the supporting software tools are ready for a productive environment. Furthermore, the output of the upconversion clearly shows a high potential in terms of flexibility and of the ability to retrieve certain information, as shown by our example applications using XQuery.

We are certain that minor problems such as the one caused by the character encoding will be fixed during the ongoing development of XProc software. From our point of view, future modifications could result in an XSD 1.1 compatible XML schema supporting more video game systems or textual content that is not review related, such as cheats, hints, or walk-throughs. Both the XSLT script and the XQuery queries could be modified in how they interact with each other. For example, the distinction of different cases that is carried out by the XSLT script could be reformulated as a pipeline step, allowing for a more maintainable XSLT script. In general, the realization of the pipeline and query system as a Web service in conjunction with a native XML database would result in an alternative search and retrieval mechanism that would indeed search for the game according to your flavour.
